Governing Autonomous AI Agents: Lessons from Ethics and Community Leadership

The Autonomy Challenge

Autonomous AI agents promise efficiency in tasks such as planning and decision-making, but unchecked autonomy risks ethical lapses and security breaches. ACM publications argue that heavy, uniform oversight does not keep pace with adaptive agents, and advocate user-centric models in which humans hold graduated control roles. Community leadership principles, such as shared norms and accountability, offer a blueprint for aligning agent behaviour with societal values.

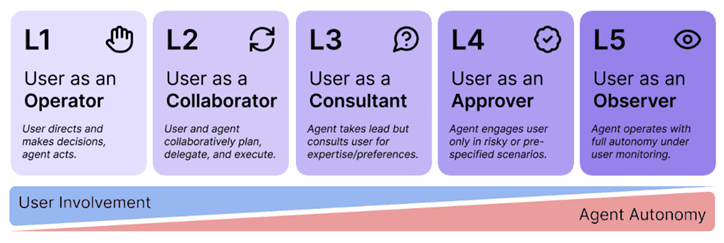

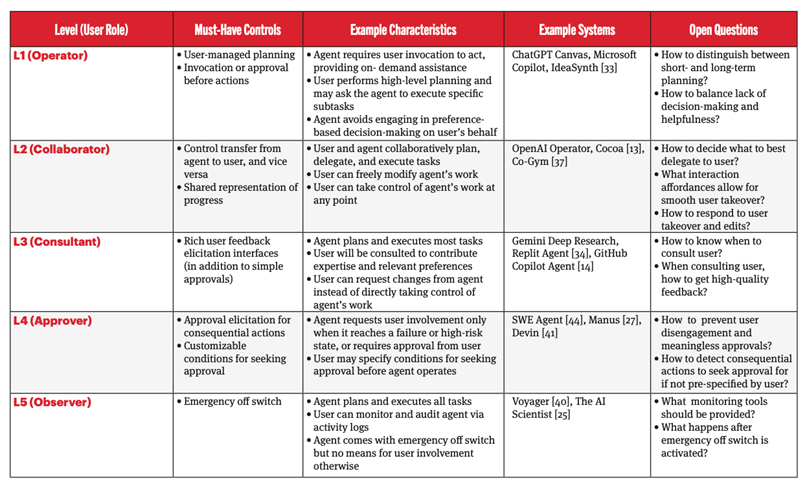

Figure 2: Autonomy levels diagram showing the five user roles: operator, collaborator, consultant, approver, and observer [source: knightcolumbia]

Key Governance Frameworks

ACM research proposes layered strategies across the agent lifecycle:

Tiered Autonomy Levels: Classify agents by user involvement, from hands-on operation (Level 1) to passive observation (Level 5), enabling risk-based certification like "autonomy certificates" for multi-agent safety.
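A minimal sketch of how the five levels might be encoded and checked against a risk tier. The role names follow Figure 2; the `MAX_LEVEL_BY_RISK` mapping, the risk tier names, and `is_certifiable` are hypothetical and not taken from the cited work.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """User role at each level, using the five roles named in Figure 2."""
    OPERATOR = 1      # hands-on operation by the user
    COLLABORATOR = 2
    CONSULTANT = 3
    APPROVER = 4
    OBSERVER = 5      # user only observes passively


# Hypothetical policy: the highest level permitted for each risk tier.
MAX_LEVEL_BY_RISK = {
    "high": AutonomyLevel.COLLABORATOR,
    "medium": AutonomyLevel.APPROVER,
    "low": AutonomyLevel.OBSERVER,
}


def is_certifiable(requested: AutonomyLevel, risk: str) -> bool:
    """Return True if the requested level does not exceed the cap for this risk tier."""
    return requested <= MAX_LEVEL_BY_RISK[risk]
```

Under this assumed policy, an agent asking for observer-level autonomy on a high-risk task would be refused, while the same request on a low-risk task would pass.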

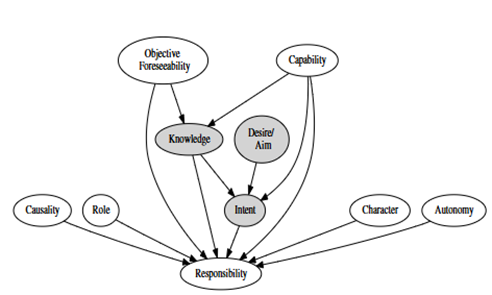

Causal Responsibility Attribution: Nine factors—including intent, foreseeability, and capability—guide blame assignment, ensuring developers embed traceable decision paths.
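One way to make decision paths traceable is to log a structured record for every agent action. The sketch below is illustrative only: it captures evidence for the three factors named above (intent, foreseeability, capability) and leaves the remaining six unspecified, since they are not listed here. The class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One logged agent decision, with evidence for later responsibility review."""
    agent_id: str
    action: str
    stated_goal: str               # evidence bearing on intent
    predicted_effects: list[str]   # evidence bearing on foreseeability
    tools_available: list[str]     # evidence bearing on capability
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionTrace:
    """Append-only in-memory log of decisions (a real system would persist it)."""

    def __init__(self) -> None:
        self._records: list[DecisionRecord] = []

    def log(self, record: DecisionRecord) -> None:
        self._records.append(record)

    def for_action(self, action: str) -> list[DecisionRecord]:
        """Return every record whose action matches, in logging order."""
        return [r for r in self._records if r.action == action]
```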

Security Hardening: Zero-trust access, prompt validation, and adversarial training counter threats like injections, treating agents as untrusted network actors.
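As a rough illustration of the first two measures, the sketch below denies every tool call that is not explicitly allowlisted and runs a simple validation pass over incoming prompts. The agent names, tool names, patterns, and length limit are all assumptions made for the example; pattern matching alone is not a sufficient defence against injection.

```python
import re

# Hypothetical allowlist: agent id -> tools that agent may call.
TOOL_ALLOWLIST = {
    "planner-agent": {"calendar.read", "calendar.write"},
    "report-agent": {"files.read"},
}

# Illustrative patterns only; real injection detection needs more than regexes.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal .*system prompt", re.IGNORECASE),
]

MAX_PROMPT_CHARS = 4000  # assumed limit


def is_tool_call_allowed(agent_id: str, tool: str) -> bool:
    """Deny by default: unknown agents and unlisted tools are both rejected."""
    return tool in TOOL_ALLOWLIST.get(agent_id, set())


def validate_prompt(prompt: str) -> list[str]:
    """Return a list of problems found; an empty list means the prompt passed."""
    problems = []
    if len(prompt) > MAX_PROMPT_CHARS:
        problems.append("prompt too long")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            problems.append(f"matched suspicious pattern: {pattern.pattern}")
    return problems
```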

| Framework | Core elements | Source |
| --- | --- | --- |
| Autonomy Levels | User roles + certificates | Knight/ACM-inspired |
| Causal Attribution | 9 responsibility factors | AAMAS Proceedings |
| Ethical Governance | Norms + audits | CACM Ethics Bridge |

Practical Implementation Steps

- Design with explainability: Use sparse autoencoders to map the agent's internal "concepts" so that oversight can target specific behaviours.

- Deploy progressive checks: Start in collaborative modes and escalate to full autonomy only after an audit.

- Monitor via ensembles: Combine the outputs of multiple agents and add human veto points for high-stakes tasks.
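The ensemble step with a human veto point could look roughly like the following. This is a sketch under stated assumptions: the ensemble is reduced to a strict majority vote over proposed actions, and `human_review` is a hypothetical callback that returns True to approve an action.

```python
from collections import Counter
from typing import Callable, Optional


def decide(
    proposals: list[str],
    high_stakes: bool,
    human_review: Optional[Callable[[str], bool]] = None,
) -> Optional[str]:
    """Return the action to take, or None if nothing should be executed.

    The action must win a strict majority of agent proposals. For
    high-stakes tasks it must also be approved by a human reviewer;
    a missing reviewer counts as a veto.
    """
    if not proposals:
        return None
    action, votes = Counter(proposals).most_common(1)[0]
    if votes <= len(proposals) / 2:
        return None  # no strict majority among the agents
    if high_stakes and (human_review is None or not human_review(action)):
        return None  # vetoed, or no reviewer available
    return action
```

Treating a missing reviewer as a veto keeps the failure mode conservative: a high-stakes action is never executed just because the review step was not wired up.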

Figure 3: Causal responsibility flowchart with factors like causality and intent

Future Directions

Elastic governance treats agents like community members, granting responsibility in proportion to proven reliability. ACM calls for global standards to prevent a "Matthew Effect" in which pruned ethics favours majority use cases over rare, critical ones. This shift positions responsibility as the foundation of innovation, not a constraint on it.
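To make the idea of reliability-based responsibility concrete, here is a small sketch that moves an agent up or down the five autonomy levels according to its recent track record. The thresholds, the minimum sample size, and the function name are assumptions chosen for illustration, not values from the cited sources.

```python
def next_level(
    current: int,
    outcomes: list[bool],
    min_tasks: int = 20,
    promote_at: float = 0.95,
    demote_below: float = 0.80,
) -> int:
    """Return the autonomy level (1 to 5) to use after reviewing recent outcomes.

    Each entry in `outcomes` is True for a task that passed audit.
    With too little evidence the level is left unchanged.
    """
    if len(outcomes) < min_tasks:
        return current
    success_rate = sum(outcomes) / len(outcomes)
    if success_rate >= promote_at:
        return min(current + 1, 5)  # never above passive observation
    if success_rate < demote_below:
        return max(current - 1, 1)  # never below hands-on operation
    return current
```

Requiring a minimum number of audited tasks before any change means a short lucky streak cannot earn an agent more autonomy.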

References

- https://knightcolumbia.org/content/levels-of-autonomy-for-ai-agents-1

- https://dl.acm.org/doi/10.1145/544741.544856

- https://cdn.openai.com/papers/practices-for-governing-agentic-ai-systems.pdf

- https://dl.acm.org/doi/10.1145/3514094.3534140

- https://dl.acm.org/doi/10.1145/3507910

- https://dl.acm.org/doi/10.5555/3306127.3331665

- https://dl.acm.org/doi/10.1145/1787234.1787248

- https://dl.acm.org/doi/proceedings/10.1145/375735

- https://arxiv.org/pdf/2502.02649.pdf

- https://cacm.acm.org/opinion/responsible-ai/

- https://www.ifaamas.org/Proceedings/aamas2025/pdfs/p1.pdf

- http://www.diva-portal.org/smash/record.jsf?pid=diva2%3A2020224

- https://dl.acm.org/doi/10.1145/3716628

- https://cacm.acm.org/blogcacm/strengthening-security-throughout-the-ml-ai-lifecycle/

- https://dl.acm.org/doi/10.5555/3709347.3743508